2013-07-02

Progress on Moz2D

So again it's been a while since I last posted here; I guess anyone still reading my blog has gotten used to that, so it's okay! I figured it was about time I updated the people reading along on some of the stuff I've been working on lately (of course with the help of the rest of the Graphics team and others!). I will be using the term Moz2D in this post to refer to our new graphics API, which was formerly known as 'Azure'.

Moz2D Stand-Alone Build & Repository

To make it easier for ourselves, partners and potential third parties interested in Moz2D to build and work with the Moz2D API, I now have a stand-alone repository that contains the basic Moz2D library code, the player and some testing applications. The exact status of this repository is still to be determined, and no automation is applied to it yet, but we are making sure it keeps building and remains up to date! It can be found here. Some basic build instructions are available here. I will try to post more detailed build information, including for the different dependencies such as Cairo and Skia, sometime in the coming weeks.

Direct2D 1.1 Integration

We've been working towards a Direct2D 1.1 backend in Moz2D. Direct2D 1.1 was included with Windows 8 and is provided as an update to Windows 7 users. It includes a wide range of API additions, several of which let us implement the Moz2D feature set with less and cleaner code. In addition, some of the API additions promise performance improvements. So far we have refrained from using some of the new APIs in the Direct2D 1.1 implementation, however, since at the moment they seem to increase complexity without offering the expected performance benefits. As we begin rolling out Direct2D 1.1 integration to actual Firefox users I'll attempt to provide more information on what we discover along the way.

Moz2D Recording & Performance Analysis Improvements

Another thing we've been working on is better recording abilities, along with better ways to get useful information out of these recordings. Although the priority for this has been relatively low, steady improvements are being made and it has proven to be a great asset in the creation of new Moz2D backends.

First of all, it's now possible to create a recording of a specific page with a single command when using Firefox Nightly. An example looks like this (assuming 'recording' is a valid, clean Firefox profile):

firefox -no-remote -P recording -recording file://C:\Users\Bas\Dev\mytest.html -recording-output mytest.aer

We're still working out some problems that often cause recordings to be terminated prematurely and therefore come up empty, but this should be fairly easy to fix. Do note that this needs to be done on a system where Moz2D content is enabled. For now that is only Windows, although it should soon include OS X as well!
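If you want to record more than one page, it can help to build the command line programmatically. The following is only a minimal sketch: the helper name `build_recording_command` and its defaults are made up for illustration, and the only flags used are the ones from the example command above.

```python
from typing import List


def build_recording_command(page_url: str, output_file: str,
                            profile: str = "recording",
                            firefox: str = "firefox") -> List[str]:
    """Build the argument list for recording a single page.

    Hypothetical helper: it only assembles the flags shown in the
    example command and does not launch anything itself.
    """
    return [
        firefox,
        "-no-remote",                     # do not attach to a running instance
        "-P", profile,                    # the clean profile used for recording
        "-recording", page_url,           # page to load and record
        "-recording-output", output_file, # where the recording is written
    ]
```

The resulting list can be passed to `subprocess.run` as-is, one call per page, with a different output file for each.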

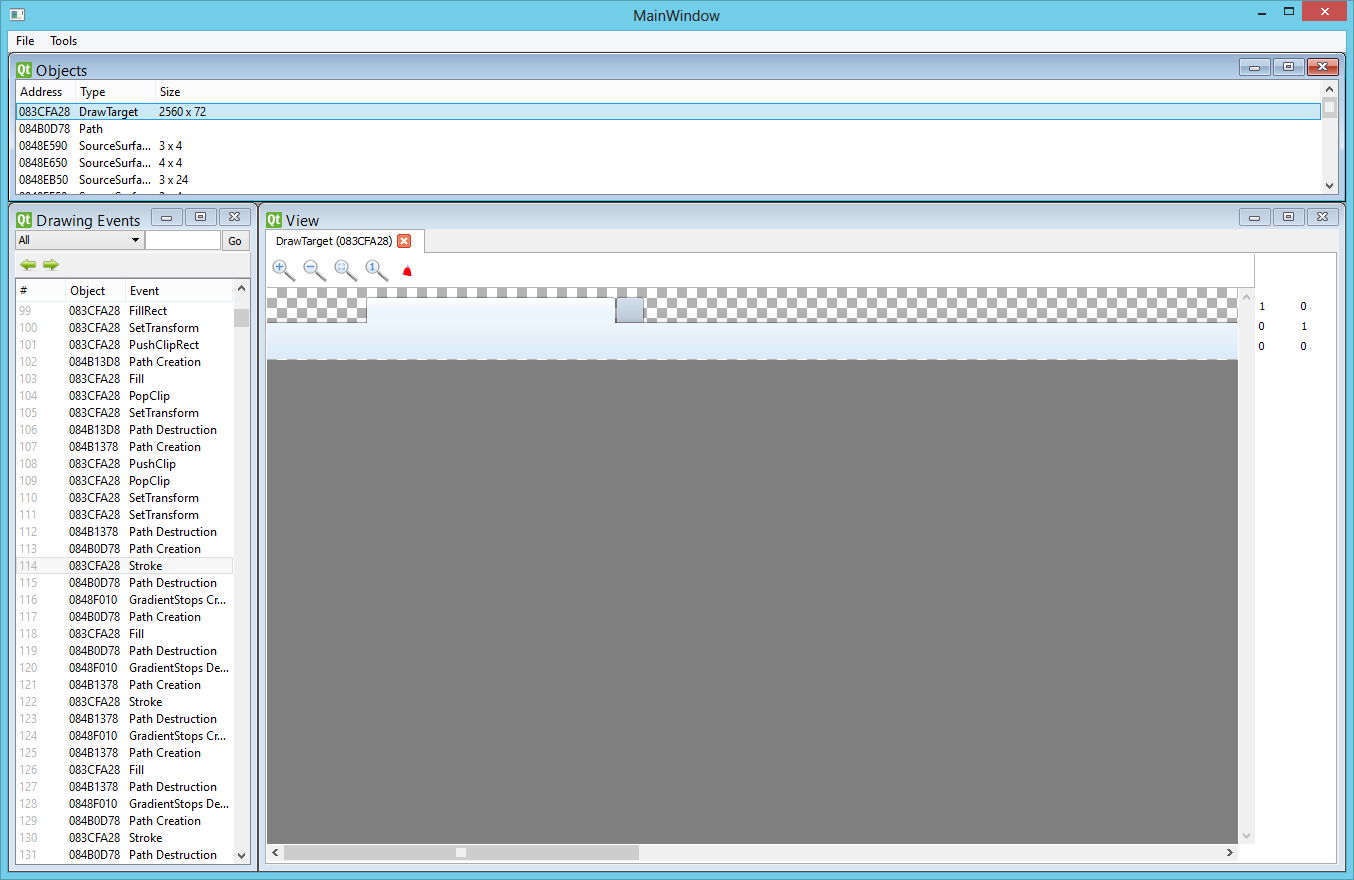

Once the recording is completed several (experimental) possibilities have been added. First of all the Moz2D player has gotten additional features, see a screenshot below for the updated look:

Note that it's now possible to replay the recording with different drawing backends (depending on your build). When adding a backend, this makes it easy to find flaws in the new implementation by comparing its drawing results with those of established backends. We've also added the possibility to analyze timings on a per-call basis to find performance bottlenecks in different backends. In addition, a separate application called 'recordbench' has been added. It is in a very early stage and is essentially a very simple tool that reports timings for a recording across a range of backends. Through this application we should be able to do automated performance comparisons between different drawing backends.
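To illustrate the idea of timing one recording across several backends, here is a rough sketch. It is not how recordbench is implemented: `time_backends` is a hypothetical name, and each "backend" is just a callable that replays the recording once, standing in for a real drawing backend.

```python
import time
from typing import Callable, Dict


def time_backends(replay_fns: Dict[str, Callable[[], None]],
                  runs: int = 5) -> Dict[str, float]:
    """Return the best wall-clock time in seconds per backend.

    replay_fns maps a backend name to a callable that replays the whole
    recording once (placeholder callables here, not real Moz2D backends).
    """
    results: Dict[str, float] = {}
    for name, replay in replay_fns.items():
        best = float("inf")
        for _ in range(runs):
            start = time.perf_counter()
            replay()
            # Keep the fastest run to reduce noise from other processes.
            best = min(best, time.perf_counter() - start)
        results[name] = best
    return results
```

Taking the best of several runs is one simple way to make the numbers comparable between backends; averaging or reporting the spread would be equally reasonable choices.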

Finally, we've added a micro-benchmark suite that allows us to make detailed performance comparisons on a per-backend basis. The intention of all this is to give us the ability to make well-informed decisions in the future about which drawing backends to prefer on specific platforms/configurations.

This isn't necessarily all that we've been doing and I'll do my best to provide additional updates in the near future!

2012-09-28

Presenting the Azure Drawing Recorder

Well, it's been a while since I've posted here, so it's about time I had something interesting to report! First of all I'm afraid over the last 3 months my blog gathered over 30 000 spam messages. This made it impossible for me to reasonably deal with them, and filter out useful comments. I've had to delete all comments, including possibly well-intended ones. I've since added a captcha and additional anti-spam measures, so things should be better now!

On to what this post is all about. A little over two months ago we had the Skia team visit us in the Toronto office. While there they showed us some of the neat things they're doing with Skia, and one of them looked pretty cool! Essentially it was a tool that could take a recorded drawing stream and inspect it. It really made me feel that a powerful debugging tool for drawing sequences could help us out as well. We already have some tools like this in the 3D drawing area (think of OpenGL APITrace or DirectX's PIXWin, soon to be the Visual Studio Graphics Debugger), but the calls seen there can be pretty hard to correlate with 2D drawing instructions due to batching and other mechanisms.

So this is what I started working on. Since Azure didn't have any recording functionality yet I had to add that first. That's mostly done now and the work has landed in nightly, so nightly can now produce recorded drawing streams. The way in which these streams are produced is still a little constrained; the following limitations apply:

- You must be using Azure content drawing, which is currently Direct2D (accelerated) on Windows Vista+ only

- The 'gfx.2d.recording' pref must be added and set to 'true'

- After the browser is restarted -all- drawing will be recorded until closing

- The stream is always stored in the file 'browserrecording.aer' in the current working directory

- Storing everything means storing all bitmaps drawn and all TrueType font data. This can make the file grow big, and currently no compression is used

- It may crash when webfonts are used

These are issues we aim to fix in the future. Some are easier to fix than others, so look for updates on this. For now, if you have a machine that's capable of Direct2D and you're curious about how we're drawing a certain site, you can add the 'gfx.2d.recording' pref and set it to true to enable recording. You can then go to the site you want to know more about and close the browser. Your working directory will now contain the 'browserrecording.aer' file (rename/move this if you don't want it to be overwritten on the next run!).

As this file is stored in a binary format, in itself it isn't actually very useful to you! This is why I've also created a simple debugger. It is in the early stages of development: its UI is not great, nor very intuitive, and functionality is limited at this point, although it should prove sufficient for debugging certain types of graphics bugs. I built it on top of Qt and the source code is available here. You can currently find a stand-alone build here, and you might also need the new VS2012 redistributables available here. To make it a little easier to explain, let's start with a screenshot of the main interface.

Now I'll proceed to explain what's currently available in the interface. This might be somewhat lengthy, so if you were just here for the cool pictures feel free to browse on! It might also require some very basic Azure knowledge. I should follow up at some point with a blog post explaining some of the basic terminology.

There are currently three distinct areas in the player:

Drawing Events

The Drawing Events area to the left represents the drawing events present in this stream. Clicking any drawing event in there will replay drawing to the corresponding point in the stream. Keep in mind that moving forward in time is faster than moving backward: when moving forward we only need to execute the 'new' drawing commands, whereas when moving backward we need to replay from the start (a drawing command can't really be 'removed'). This might be fixable in the future by storing 'keyframes' every so many drawing events.
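To make the keyframe idea concrete, here is a small sketch of how seeking could work with periodic snapshots. This is not the player's code: `KeyframedReplayer` is a hypothetical class, the drawing state is modelled as a plain dictionary instead of real DrawTarget contents, and each event is simply a function that mutates that state.

```python
import copy
from typing import Callable, Dict, List

State = Dict[str, int]            # stand-in for real DrawTarget contents
Event = Callable[[State], None]   # a drawing command mutates the state


class KeyframedReplayer:
    """Replay a list of events, snapshotting state every `interval` events."""

    def __init__(self, events: List[Event], interval: int = 100) -> None:
        self.events = events
        self.interval = interval
        self.state: State = {}
        self.position = 0                           # events applied so far
        self.keyframes: Dict[int, State] = {0: {}}  # position -> snapshot
        self.replayed = 0                           # executed events, for illustration

    def seek(self, target: int) -> State:
        if not 0 <= target <= len(self.events):
            raise IndexError(target)
        if target < self.position:
            # A drawing command cannot be undone, so restore the nearest
            # earlier snapshot instead of replaying from the very start.
            base = max(k for k in self.keyframes if k <= target)
            self.state = copy.deepcopy(self.keyframes[base])
            self.position = base
        while self.position < target:
            self.events[self.position](self.state)
            self.position += 1
            self.replayed += 1
            if (self.position % self.interval == 0
                    and self.position not in self.keyframes):
                self.keyframes[self.position] = copy.deepcopy(self.state)
        return self.state
```

With an interval of 100, stepping back from event 950 to event 920 restores the snapshot taken at 900 and replays only 20 events. The trade-off is memory: each keyframe is a full copy of the drawing state, so the interval would need tuning for real surfaces.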

In the columns you will see the current drawing event 'ID' (its position in the sequence), the 'object' that drawing call applies to (in case of object creation or destruction the object that was created/destroyed, otherwise the DrawTarget a drawing call executed on), and the type of drawing event.

At the top there's a text input that allows you to jump directly to a certain drawing event 'ID', back/forward buttons to jump through events you've recently visited, and a dropdown box that allows you to filter on events coming from a specific object.

Objects

This is the list of objects alive at the currently selected point in the drawing stream. Aside from being useful in debugging lifetime issues and showing some (currently very rudimentary) information about the objects listed, this can be used to do a number of other things. One of those is that you can double-click several types of objects to open them in the 'View' area, which I'll explain more about later. In addition, an object can be right-clicked in order to filter the Drawing Events list based on that object.

View

In this area relevant information can be viewed on an object. In the screenshot shown above we're currently showing the DrawTarget where the UI is being drawn at this point in time. We can do the obvious zooming in and out of DrawTargets and SourceSurfaces. In addition, for DrawTargets we display the currently set transform to the right, and there is a toggle button at the top that will visualize the currently set clip (it will illuminate it in bright red!). This area, just like the objects area, is live, and what is shown here will update as you navigate through the drawing events.

So that about covers the basics! Under 'Tools' there is a feature called 'redundancy analysis' that is currently very experimental. If you do decide to play with it, keep in mind it will corrupt the heap of the player :-)! Once I've worked on this a little more I will talk about it in a follow-up blog post. Again, this is all in a very early stage and suggestions and contributions are all very welcome!

But this is all Windows-only :(

That's true! Currently the recording side of things, which is present on mozilla-central, is available everywhere if you force-enable Azure content on those platforms. However, fonts currently do not work outside of Windows, and Azure content is not yet ready to be used for browsing on non-Windows platforms. The player, however, is currently only available on Windows. Although it has been developed on top of Qt and should work on other platforms, it uses the Azure stand-alone build, which currently only has a Visual Studio project file and only supports Direct2D.

I'm very interested in having the stand-alone library and test-suite supported on other platforms, and as such if you want to help out in this area please let me know! I'll be more than happy to provide more detailed instructions and help out.

That's all for now! I have a lot of other ideas to work out with the new recording system, I'll hopefully be able to cover more of those here in the near future.

2012-03-28

Solving Indentation Pains for Visual Studio 2010

So, I've been working on a lot of things lately that would not be very interesting to share with you all at this point. However, I've now run across something that might actually be useful for others.

Anyone who has ever worked in Mozilla code knows that, for various reasons, indentation depth and type are not very consistent across the tree. Some code uses a 4-space indent, other code a 2-space indent, and Cairo for example uses a 4-space indent with an 8-space tab-width. Now luckily, most of our files actually have a modeline describing the intended indentation settings for that file. However, Visual Studio has no default way of using this information (or at least, I haven't found it).

After making another indentation mistake that caused me significant pain, by having to go back and delete spaces all over the place, I decided to write a little Add-in for Visual Studio that reads the emacs modeline and sets the editor settings accordingly. I added a little easy installer so other people can hopefully use it to make their lives easier as well. Note that after installing, 'Tools->Add-in Manager' can easily be used to (temporarily) disable it, although you can of course also uninstall it. There are a couple of caveats I'd like to share:

- It only reads modelines in the first line of the file, and its parsing isn't 100% correct (though it works fine for all cases I found in the Mozilla tree).

- If it does not find a modeline in a file it sets your editor to a default: No tabs, 2 space indent, 2 space tab-width.

- A lot of files in the Mozilla tree specify tab-width 20. Although this is fine since they don't use tabs, if you're like me and have multiple files open in Visual Studio at a time, setting the tab-width to 20 will make those files appear a little funky if they do contain tabs.

- The installer only works with Visual Studio 2010 and only installs the Add-in for the current user (it does not require elevation)

- When uninstalling, the installer removes the Documents\Visual Studio 2010\Addins folder if it contains no other add-ins. This shouldn't actually matter.
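For those curious what the modeline handling described in the caveats above amounts to, here is a rough sketch in Python. It is not the Add-in's code: `EditorSettings` and `settings_from_first_line` are names invented for this example, and falling back to the defaults for any key the modeline does not mention is an assumption.

```python
import re
from dataclasses import dataclass


@dataclass
class EditorSettings:
    # Defaults used when no modeline is found:
    # no tabs, 2 space indent, 2 space tab-width.
    use_tabs: bool = False
    indent_size: int = 2
    tab_width: int = 2


# An emacs modeline is delimited by "-*-" on both sides.
_MODELINE = re.compile(r"-\*-(.*?)-\*-")


def settings_from_first_line(first_line: str) -> EditorSettings:
    """Derive editor settings from the first line of a file."""
    settings = EditorSettings()
    match = _MODELINE.search(first_line)
    if match is None:
        return settings
    for part in match.group(1).split(";"):
        key, sep, value = part.partition(":")
        if not sep:
            continue  # not a "key: value" pair
        key, value = key.strip().lower(), value.strip()
        if key == "tab-width" and value.isdigit():
            settings.tab_width = int(value)
        elif key == "c-basic-offset" and value.isdigit():
            settings.indent_size = int(value)
        elif key == "indent-tabs-mode":
            # In emacs, "nil" means spaces are used instead of tabs.
            settings.use_tabs = value.lower() != "nil"
    return settings
```

Like the Add-in, this only looks at a single line and ignores everything it does not recognise (such as the `Mode:` entry), so it is deliberately forgiving rather than a complete modeline parser.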

Getting Visual Studio to output an installer the way I wanted it (no elevation prompt, etc.) turned out to be pretty painful! So I'm sure the installer isn't very good, but it gets the job done.

You can find the installer here. Hopefully it will help you as much as it helps me! If you have any suggestions, let me know.

2011-09-22

Direct2D Azure hits Firefox 7

Hrm, Azure, what's that again?

You can find out all about Azure in other blog posts: there's an introduction from Joe Drew, and there are several more detailed posts on my blog discussing the Direct2D Azure backend and its performance implications. The bottom line is that we're working on a new graphics API that will be used for rendering in Gecko.

What does that mean for Firefox 7?

Well, we're currently only using it with Direct2D and only for canvas. This allows us to stress test it. Although a wide array of tests has been run, and it has been in use by our Aurora and Beta testers for a while now, there might still be issues we have missed. If these issues show up in the final release we'll only have caused a regression in canvas for a limited subset of our users, rather than in all browser rendering. The bottom line is that you should generally see a speed improvement using 2D canvas in Firefox 7 on Windows 7 or Vista with a sufficiently powerful graphics card.

So what's next, what's the status?

We're currently working hard on both a Cairo and a Skia backend for the Azure API, which means we'll be able to use the Azure API on all platforms, possibly getting some quick performance benefits on platforms where Skia outperforms the Cairo backends we're currently using. At the same time we're working on creating a layer that will allow controlled migration of all our content drawing code from the current 'Thebes' APIs to the new Azure API. Once that is done, webpage rendering in general can start taking advantage of all the latest work!

That's about all I have for you right now, enjoy!

2011-08-16

Releasing Azure

We've been working hard over the last few months to get Azure (canvas use only at this time) ready for shipping. As Firefox 7 has been in the Aurora stage for a while now, it's safe to say that there's a very good chance Azure will be shipping with Firefox 7! I'd like to use this opportunity to say a little bit more about how Azure will be present in Firefox 7 and the road ahead.

Awesome! So what does it mean?

Well, those of you reading my blog have probably read my earlier post about Azure and the performance improvements it brings to several canvas demos. Those performance improvements are still valid! It should be noted that not a lot of optimization work has gone into Azure for Firefox 7, though. This means that there are still some cases where traditional canvas performs a little better than Azure (particularly in relation to shadows; this can be seen in some parts of the 'asteroids' benchmark, see bug 667317). However, despite those caveats we don't want to delay bringing the improved performance on the majority of real-world use cases to our users! In addition, because of the architectural step forward Azure is for us, it's great to start getting feedback from a larger user base so we can move ahead from here with more bugs fixed and more confidence in the architecture.

Why would it not be in Firefox 7?

As part of our rapid release cycle (as many of you will probably know), we want to guarantee releases at regular intervals. This means that if in the beta stage a serious issue is found in Azure, we will disable Azure for Firefox 7. This is a good thing! It means that although Azure will have to wait a couple of weeks for Firefox 8, all the other improvements included in 7 will get to you in time.

So what's the way forward for Azure?

The Azure plan is still largely the same. The immediate short-term tasks we're focusing on are as follows:

- Create a Cairo backend to Azure, this will allow Azure to be usable on all platforms, allowing us to migrate more of our code to it.

- Create a 'Thebes' wrapper for Azure. Thebes is our current cairo-wrapper library, with this wrapper we can use Azure through our traditional rendering code, and progressively move code in our tree to use Azure 'natively'.

- Continue preliminary work on 'Emerald', our own, cross-platform, accelerated backend for Azure.

In addition to this, we've also decided to create an experimental Skia backend. This will allow us to do good performance comparisons, and of course on platforms where this can get us a performance improvement we'll be able to use more of the awesome work coming from the open source world!

That's all for now! Keep testing and don't hesitate to contact me, or even better, file bugs in bugzilla if you find any issues!