2011-06-06

Comparing Performance: Azure vs Cairo

You haven't posted in a while!

This is true, and I do apologize! I've been very busy and hope to post more again in the near future. I particularly apologize for any comments I might have missed: my blog was getting covered with spam comments and it became impossible to separate the good from the bad. I've mass-deleted over 2000 spam comments and updated both the blog software and the antispam blacklist. Hopefully things will be better now.

What's Azure?

Azure is a new graphics component that we've been developing for use with Mozilla. Since Joe Drew has already done an excellent blog post on the subject, I won't be going into much detail. You can find his blog post here. If you haven't read it yet, I recommend you do so before you continue reading this post! :)

So what's the current status?

Well, we set a goal for ourselves to implement the Azure API on Direct2D for Q2, so this is what I've been working on for the last couple of weeks. In addition to that, we've created an implementation of Canvas2D that sits on top of Azure. The sum of that work is a build of Mozilla that can run a canvas implementation based on the new Azure API. This has allowed me to do performance and correctness comparisons between the current Canvas code, based on the cairo Direct2D backend, and the new code based on Azure. The latest builds feature an implementation of canvas on the Azure D2D backend that is almost as good in terms of correctness as the cairo version. In some cases it's even better!

Almost? What's broken?

So there are a couple of issues that still need to be resolved. The main remaining problems are:

- No performance optimizations have been done yet, so there are some pitfalls right now

- The Radial Gradient implementation does not follow the spec when the inner circle lies (partially) outside of the outer circle

- Global composite operations which are unbound by their source do not behave properly in the presence of a clip

- isPointInPath returns false for points which are exactly on the edge of a path

- Behavior of zero size canvases is not exactly as it should be

So, what about performance?

In general, you should see much improved performance for Canvas2D in a Direct2D environment. Almost any test will perform either at the same level or better. Some tests will benefit greatly while others will basically stay the same; this mainly depends on where the bottlenecks for the tests are. There are, however, still some performance pitfalls where Azure may be slightly slower than Cairo. Most of these cases should be resolved in the near future. At the moment the initialization of Azure canvases is also slightly more expensive than normal, but this should easily be offset by improved performance!

Some numbers

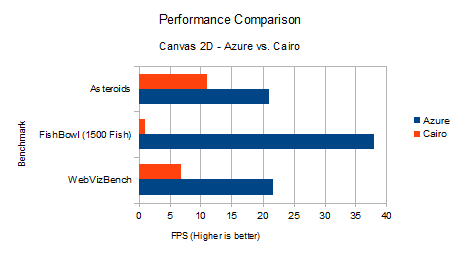

I ran several benchmarks comparing Azure canvas performance to the Cairo Direct2D backend canvas performance. Here's a nice chart:

In addition to these tests there were some tests which weren't easy to include in the chart since they didn't report frames per second. Two are notable. The IE Testdrive 'Speed Reading' test ran in 6 seconds with both Azure and Cairo, but reported an average drawing time of 5 ms for Azure and 8 ms for Cairo. Possibly the total time still ended up being the same due to the nature of timeouts in Firefox. A more pronounced difference showed up in the IE TestDrive Paintball demo, which ran in 10.91 seconds on Azure versus almost 30 seconds on Cairo!
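To make the 'same total time, different drawing time' observation a bit more concrete, here is a minimal sketch of one way it could happen. It assumes a purely hypothetical model in which every frame is driven by a timer that cannot fire more often than some minimum interval. The 10 ms floor and the frame count used in the usage note are made-up illustration values, not measurements from the test, and `total_run_time_ms` and `speedup` are names invented for this sketch.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical model: a frame cannot be scheduled more often than every
// `min_interval_ms`, so each frame costs at least that long in wall time.
double total_run_time_ms(int frames, double draw_ms, double min_interval_ms) {
    assert(frames >= 0 && draw_ms >= 0.0 && min_interval_ms >= 0.0);
    return frames * std::max(draw_ms, min_interval_ms);
}

// Ratio of two timings, e.g. Cairo time divided by Azure time.
double speedup(double baseline, double improved) {
    assert(improved > 0.0);
    return baseline / improved;
}
```

With an assumed 600 frames and an assumed 10 ms timer floor, `total_run_time_ms(600, 5.0, 10.0)` and `total_run_time_ms(600, 8.0, 10.0)` both come out at 6000 ms, even though `speedup(8.0, 5.0)` says the drawing itself is 1.6 times faster. Under this model the faster drawing only shows up in the total once the drawing time exceeds the timer floor.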

All in all we're very happy with these results and hopefully future optimizations will improve them further.

I want to see this for myself!

Well, you can! I've made a build with Azure available here. Using this build, Azure will be enabled by default -if- you have Direct2D support. If your system does not support Direct2D (you can check on the 'about:support' page), this will function practically as a normal nightly build. You can switch Azure on and off through the 'gfx.canvas.azure.enabled' preference on the 'about:config' page.

Some info for people who want to do their own testing:

- My testing was done on a dual Xeon E4650 with 24 GB of RAM and a Radeon 6970 GPU

- Performance of this build cannot be compared to a normal Firefox build, as Profile Guided Optimization was not performed on this build

What's next?

Well, first we have to iron out the last quirks and get AzureD2D canvases shipped. Once that's done it will mark a major milestone in the Azure project. Soon to follow should be an Azure implementation in Quartz, bringing performance improvements to our Mac audience. The next, possibly most interesting step for Azure will be a larger project: creating a backend that can use the GPU for rendering vector graphics through OpenGL and different Direct3D versions. This will hopefully allow us to bring fast, consistent rendering performance to our users on all platforms!

Eventually all browser rendering, and not just Canvas, should run through Azure, further improving your browsing experience in Firefox!

2010-09-07

Firefox 4 Beta: Bringing Hardware Acceleration

With Firefox 4 getting closer and closer to release, we've introduced a new feature in the Beta: We've enabled hardware acceleration through Direct2D by default for our users using Windows Vista or Windows 7 and having DirectX 10 Compatible hardware. As some of our users might know, this feature has been available to them through a special preference for a while now, even as far back as the Alpha. However, after a lot of hard work from our Graphics team, we now feel confident enough to enable it by default for our users with compatible hardware.

So what is Hardware Acceleration?

Usually when we talk about hardware acceleration we mean using the graphics card of your computer to accelerate certain graphical operations. Nowadays the graphics cards in most people's computers have an immense amount of computational power, often many times more than the normal processor. This computational power is very specialized and cannot just be used for anything. It's most commonly used for video games, but obviously as web browsers use more and more graphical effects, we want to use it inside Firefox as well!

What is Direct2D?

Direct2D is a rendering system that is part of the DirectX package shipped with Windows. It was introduced in Windows 7 and ported back to Windows Vista in the Vista Platform Update. It allows us to access the hardware with a simple 2D graphics drawing API for all Mozilla drawing code, allowing hardware acceleration for a very large number of scenarios.

How will I see it's working?

You should notice that some pages are a lot faster and more responsive, in particular pages that use advanced, animated graphical effects such as transparency or transformations. In addition to that, you can see if it's currently turned on for your system by looking in 'about:support'.

Help! It broke my browsing experience!

Since we're currently still in Beta, it's not inconceivable that hardware acceleration might cause issues on your particular system! Of course we don't want this to prevent you from using and testing our beta. If you're experiencing issues, you can switch it off by going to 'Tools->Options->Advanced', where you will be able to unset hardware acceleration.

Grafx Bot

So this thing might break your browsing experience, right? Well, that's something we'd love to hear about so we can fix those issues! We've created an extension especially designed to test our browser on your system, and it can be used to send us data on any unexpected behavior. There's a great post on it on JGriffin's Blog. The more people install it, the faster we can improve our support!

That's all well and good, but what about other platforms?

At this point in time we do not have a system such as Direct2D available on other platforms. However we are working hard on alternative approaches to use hardware acceleration on other platforms. You should expect to hear more on that soon!

2010-04-22

Direct2D: Investigating Cached Tessellations

So, at Mozilla we've been looking into more ways to improve our performance in the area of complex graphics. One area where Direct2D is currently not giving us the kind of improvements we'd like is the drawing of complex paths. The problem is that drawing a path re-analyzes it on every frame using the CPU, so these scenarios are bound mainly by the speed of the CPU. This is something we'd like to address in order to improve the performance of, for example, dynamic SVG images. After all, once you have analyzed a certain path, you want to retain as much as you can from that analysis and re-use it when drawing a new frame with only small changes.

Path Retention Support in Cairo

One of the things that needs to happen is that we need to support retaining paths in cairo, in such a way that a cairo surface can choose to associate and retain backend-specific data related to that path, much like what is already possible in cairo for surface structures. That task has been taken up by Matt Woodrow and has been coming along nicely (see bug 555877), so I'm not going to spend a lot of time talking about it. What I am going to talk about is my investigation into how to put this to good use from a Direct2D perspective.

Tessellation Caching in Direct2D

When I started my investigation, I was hoping that perhaps ID2D1Geometry would have some level of internal caching. In other words, if I just filled the same ID2D1Geometry every frame, this would be significantly faster than re-creating the geometry each frame. For testing this I chose the following geometry. It is fairly simple, but it has some intersections and some nice big curves, so tessellation should be non-trivial:

    // 'sink' is the geometry sink of the path geometry being built.
    // Start a filled figure at (600, 200).
    sink->BeginFigure(D2D1::Point2F(600, 200), D2D1_FIGURE_BEGIN_FILLED);

    // Three cubic Bezier segments; each one starts where the previous one ended.
    D2D1_BEZIER_SEGMENT seg[3];
    seg[0].point1 = D2D1::Point2F(1100, 200);
    seg[0].point2 = D2D1::Point2F(1100, 700);
    seg[0].point3 = D2D1::Point2F(600, 700);
    seg[1].point1 = D2D1::Point2F(100, 700);
    seg[1].point2 = D2D1::Point2F(100, 200);
    seg[1].point3 = D2D1::Point2F(600, 200);
    seg[2].point1 = D2D1::Point2F(1400, 300);
    seg[2].point2 = D2D1::Point2F(1400, 1400);
    seg[2].point3 = D2D1::Point2F(600, 1000);
    sink->AddBeziers(seg, 3);

    // One straight line, then close the figure back to the start point.
    sink->AddLine(D2D1::Point2F(30, 130));
    sink->EndFigure(D2D1_FIGURE_END_CLOSED);

Sadly there seemed to be no caching going on. The only speed improvement I could see came from not re-creating the geometry; the actual rendering showed no performance benefit. However, as we were determined to see if something else could get the desired effect, our eye was caught by another D2D interface.

The ID2D1Mesh and its limitations

So Direct2D has a Mesh object. This is a device-dependent object which can be created on a render target and then filled with the tessellation of an existing geometry (with a certain transformation applied). I should note here that since this Mesh is a collection of triangles, the level of detail is determined by the transformation passed into Tessellate. This means that if you simply zoom in on the mesh, at some point curves will no longer look like curves. This is the first limitation of Meshes. For the purposes of this investigation, however, I'm going to assume we will not scale, and I'm simply going to draw the same untransformed geometry over and over again. In any case, more often than not we won't be scaling up significantly, so this isn't really a limitation; it just means we have to re-tessellate in some cases.
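The 'level of detail is fixed at tessellation time' point can be illustrated without any Direct2D at all. The following is a minimal sketch that flattens one cubic Bezier into line segments (a simpler stand-in for triangle tessellation), remembers the scale it was flattened for, and reports when a cached result is too coarse to reuse. The names `flatten` and `can_reuse` and the '8 device pixels per segment' heuristic are assumptions made for this sketch; this is not how Direct2D decides its level of detail.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { double x, y; };

// Evaluate a cubic Bezier curve at parameter t in [0, 1].
Point cubic_at(Point p0, Point p1, Point p2, Point p3, double t) {
    const double u = 1.0 - t;
    const double a = u * u * u, b = 3 * u * u * t, c = 3 * u * t * t, d = t * t * t;
    return {a * p0.x + b * p1.x + c * p2.x + d * p3.x,
            a * p0.y + b * p1.y + c * p2.y + d * p3.y};
}

double dist(Point a, Point b) { return std::hypot(b.x - a.x, b.y - a.y); }

// A flattened curve together with the scale it was flattened for.
struct CachedFlattening {
    std::vector<Point> points;  // polyline approximating the curve
    double scale;               // scale factor the polyline was built for
};

// Flatten the curve; a larger scale produces more segments.
CachedFlattening flatten(Point p0, Point p1, Point p2, Point p3, double scale) {
    assert(scale > 0.0);
    // Assumed heuristic: one segment per 8 device pixels of control polygon.
    const double device_length =
        scale * (dist(p0, p1) + dist(p1, p2) + dist(p2, p3));
    const std::size_t segments = std::max<std::size_t>(
        1, static_cast<std::size_t>(std::ceil(device_length / 8.0)));
    CachedFlattening result{{}, scale};
    result.points.reserve(segments + 1);
    for (std::size_t i = 0; i <= segments; ++i) {
        result.points.push_back(
            cubic_at(p0, p1, p2, p3, static_cast<double>(i) / segments));
    }
    return result;
}

// The cache is detailed enough as long as we are not zooming in past the
// scale it was built for; otherwise the curve has to be flattened again.
bool can_reuse(const CachedFlattening& cache, double requested_scale) {
    return requested_scale <= cache.scale;
}
```

Flattening the first Bezier of the test geometry at scale 1.0 and then asking `can_reuse(cache, 2.0)` returns false, which is the 're-tessellate in some cases' situation. Drawing at the same or a smaller scale keeps using the cached points.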

Now there's another limitation which is more problematic: Meshes only work with Direct2D render targets which have Per Primitive Anti-Aliasing (from here on PPAA) disabled. PPAA is an analytical anti-aliasing routine, which is most likely part of the reason why tessellations are not cached by Geometries internally. Anti-aliasing is important to us: non-AA drawing in Mozilla is rare, and without it things would truly not look so good! There is another option though. When drawing to DXGI surfaces, as we do, you can set the GPU to use Multi-Sample Anti-Aliasing (from here on MSAA) to do anti-aliasing.

MSAA vs. PPAA

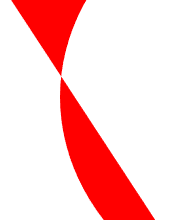

So, the quality of MSAA is worse than that of PPAA, but it is also faster than PPAA on decent graphics hardware. We'll get to analyzing the performance of several different solutions later; first let's look at the quality. First of all, with no scaling:


Now for a bit more detail:


Notice the smoother transition from white to red on the left edge in the PPAA version. So there's most certainly a difference in quality, although MSAA isn't that bad either! (On some hardware it may be higher or lower quality due to hardware MSAA capabilities)
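The quality difference can be sketched numerically. The following assumes a deliberately simplified setup: a single pixel spanning [0, 1] horizontally, a shape that covers everything to the left of a vertical edge, and MSAA samples laid out in an evenly spaced row. Real hardware uses different sample patterns, and `analytic_coverage` and `sampled_coverage` are names made up for this sketch, so treat it as an illustration of the idea rather than a description of what Direct2D or the GPU does.

```cpp
#include <algorithm>
#include <cassert>

// Analytic coverage: the exact fraction of the pixel left of the edge.
double analytic_coverage(double edge_x) {
    return std::min(1.0, std::max(0.0, edge_x));
}

// Sampled coverage: the fraction of sample points left of the edge.
// Assumption: samples sit at evenly spaced positions (i + 0.5) / samples.
double sampled_coverage(double edge_x, int samples) {
    assert(samples > 0);
    int covered = 0;
    for (int i = 0; i < samples; ++i) {
        const double sample_x = (i + 0.5) / samples;
        if (sample_x < edge_x) ++covered;
    }
    return static_cast<double>(covered) / samples;
}
```

With 8 samples, an edge at 0.2 and an edge at 0.3 both give a sampled coverage of 0.25, while the analytic coverage distinguishes them as 0.2 and 0.3. Sampled coverage can only take 9 distinct values at 8 samples per pixel, which is one way to think about the less smooth transition in the MSAA image.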

Another Limitation of MSAA

So at this point we would be about ready to look at performance differences, except for one thing: MSAA is no longer used when you use PushLayer! The intermediate surface that gets created with PushLayer appears not to inherit the original surface's MSAA settings. Since we use Layers in order to do geometric clipping, this poses another problem. We need to be able to do geometric clipping while continuing to use our retained mesh, and with MSAA.

To overcome this problem in my investigation, I've optionally used another method of clipping. I've created a texture with MSAA enabled (much like CreateLayer), and then I've created a non-MSAA texture, around which a SharedBitmap was created (so that it can be drawn to the main render target). When clipping, the geometry would be drawn to the MSAA texture, which could then be resolved to the non-MSAA texture, which was drawn into the clipping area using FillGeometry. The clipping area was chosen to be a single triangle: non-rectangular, so as to prevent any optimizations using scissor rects, but also trivial to tessellate, so that the FillGeometry call for the clipping would not poison the measurement. (Optionally we could use FillMesh for the clipping area as well with this approach if we had a complex clipping path!)
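For readers unfamiliar with the 'resolve' step in that manual clipping path, here is a minimal CPU-side sketch of what resolving a multisampled surface into a single-sampled one amounts to, assuming a plain box filter (the average of all samples in a pixel). In the real setup the GPU does this, the exact filter is up to the hardware and driver, and the `Color` struct and `resolve` function are made up for this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Color { float r, g, b, a; };

// Resolve a multisampled buffer: `samples` holds `samples_per_pixel`
// consecutive entries per pixel, and each output pixel is their average.
std::vector<Color> resolve(const std::vector<Color>& samples,
                           std::size_t samples_per_pixel) {
    assert(samples_per_pixel > 0);
    assert(samples.size() % samples_per_pixel == 0);
    std::vector<Color> pixels;
    pixels.reserve(samples.size() / samples_per_pixel);
    const float inv = 1.0f / static_cast<float>(samples_per_pixel);
    for (std::size_t p = 0; p < samples.size(); p += samples_per_pixel) {
        Color sum{0.0f, 0.0f, 0.0f, 0.0f};
        for (std::size_t s = 0; s < samples_per_pixel; ++s) {
            const Color& c = samples[p + s];
            sum.r += c.r; sum.g += c.g; sum.b += c.b; sum.a += c.a;
        }
        pixels.push_back({sum.r * inv, sum.g * inv, sum.b * inv, sum.a * inv});
    }
    return pixels;
}
```

A pixel on the edge of a red shape over a white background, with 2 of its 8 samples red and 6 white, resolves to (1, 0.75, 0.75, 1). The resolved, single-sampled result is what can then be drawn into the clipping area like any other bitmap.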

Testing Conditions

- Core i7 920

- ATI Radeon HD5850

- Stand-alone skeleton D2D application

- MSAA x8 where MSAA is specified

- Surface 1460x760 pixels

- Drawn 100 times per frame

- 10 draws per clip where clipping is enabled

- All D3D multithreaded optimizations disabled

- Rendering as often as possible, no VSync, clearing once per frame

- No Mesh Measurements with PPAA (since it doesn't work)

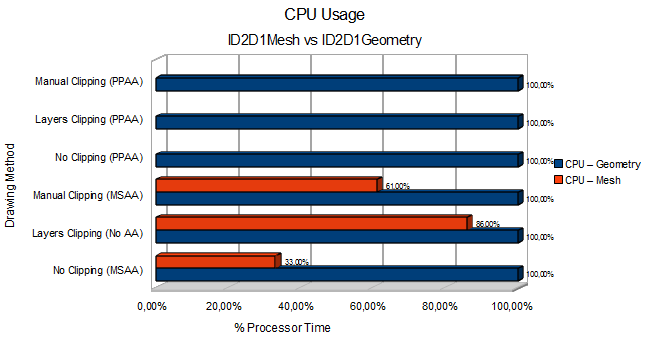

CPU Usage

As we can see there's a very consistent pattern: the CPU is saturated when drawing the Geometry without cached tessellation. When we draw our existing Mesh, we can see a significant reduction in CPU usage and we presumably become GPU bound.

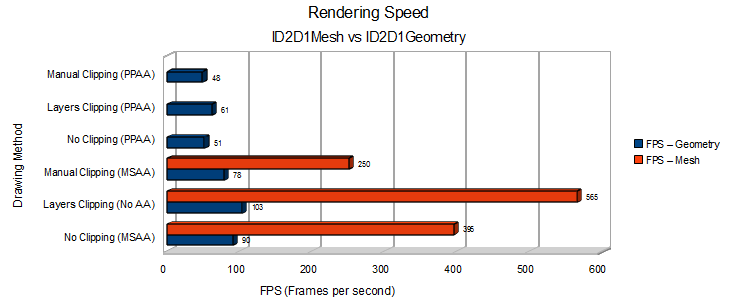

Rendering Speed

We can see that using the retained tessellation through an ID2D1Mesh can offer a significant performance benefit over using an ID2D1Geometry. Also note that drawing to a clipping layer appears to be somewhat faster than drawing to the backbuffer surface directly.

What do we see?

So these are the numbers. The cause of drawing to a clipping layer being slightly faster is most likely that a DXGI surface render target needs to do some degree of syncing that an internal D2D render target (created by PushLayer) does not.

We can clearly see that we can free up a lot of CPU when retaining tessellations of some complexity, even while we produce higher framerates.

One thing I've noticed is that BeginDraw and EndDraw take a lot of CPU. Not doing these calls when using the intermediate clipping render target seemed to significantly reduce CPU usage. Of course the results are then no longer guaranteed to be correct, since EndDraw ensures that all rendering commands are flushed, so this method wasn't used. Additionally, using Flush on the render target rather than EndDraw before resolving the MSAA surface (which should in theory produce correct results) also seemed to lower the CPU usage to some degree. However, since correctness is hard to judge in these cases, I chose not to do that either. There is room for further analysis here, and perhaps for an even further decrease of CPU usage in the Mesh rendering with manual clipping approach.

Any Conclusion?

Well, I can't really draw any conclusions from this at this point, other than that there's a clear trade-off between performance and quality. It's certainly worth investigating further, and possibly a 'mixed' approach could be used depending on the complexity of the path and the quality requirements of the user. I realize this was a pretty long and technical post :) But I hope that for those of you interested in this sort of stuff I've been able to provide some interesting initial measurements in the area of complex geometry rendering in Direct2D. I'm looking forward to any opinions, criticisms, hints or other forms of input on my methods and ideas!

2010-04-07

Firefox Video Goes Up To 11

So, I talked to you all a while ago about layers, and how we are going to be using them to accelerate composition of web pages across all platforms. There's more news on that front! Recently we landed a first version of the OpenGL layers backend onto trunk (see bug 546517). That backend included all the necessary code to use OpenGL for both image upscaling and YUV to RGB color space conversion.

In general, the code using layers is not yet at a point where it can benefit from the hardware layers backend for all rendering. For this reason the OpenGL backend is not used yet for your normal browsing. However, as many of you may know, the performance of fullscreen HTML5 video is not fantastic at the moment on lesser CPUs. Since fullscreen video in particular needs that extra push over the cliff, we decided to enable the OpenGL layers backend by default specifically for the fullscreen video case. What that means is that we upload the Y, Cb and Cr planes to your GPU, draw them to a fullscreen quad and then combine them to create the RGB image on your monitor. Ultimately what matters is that for those of you using compatible hardware and software it should lead to a big improvement in fullscreen video performance when using our latest Nightlies (get them here) and the upcoming Alpha!
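For those curious what 'combining' the Y, Cb and Cr planes involves, here is a minimal per-pixel sketch of the arithmetic, written as CPU code for readability. It assumes 8-bit limited-range ('video range') BT.601 coefficients, which are a common choice for this kind of video but are an assumption here, and it does not claim to be the matrix or shader the OpenGL backend uses. `ycbcr_to_rgb` and `to_byte` are names made up for this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Rgb { std::uint8_t r, g, b; };

// Clamp to [0, 255] and round to the nearest integer.
static std::uint8_t to_byte(double v) {
    return static_cast<std::uint8_t>(
        std::lround(std::min(255.0, std::max(0.0, v))));
}

// Convert one pixel from 8-bit YCbCr to 8-bit RGB.
// Assumed: limited-range BT.601 (Y in 16..235, Cb/Cr centered on 128).
Rgb ycbcr_to_rgb(int y, int cb, int cr) {
    assert(y >= 0 && y <= 255 && cb >= 0 && cb <= 255 && cr >= 0 && cr <= 255);
    const double luma = 1.164 * (y - 16);
    const double pb = cb - 128;
    const double pr = cr - 128;
    return {to_byte(luma + 1.596 * pr),
            to_byte(luma - 0.392 * pb - 0.813 * pr),
            to_byte(luma + 2.017 * pb)};
}
```

On the GPU the same handful of multiply-adds runs once per output pixel while sampling the three planes as textures, which is why moving it off the CPU helps so much at fullscreen resolutions.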

Compatible Hardware And Software?

So, currently there's a little bit of work that still needs to be done to make OpenGL layers work on Mac OS X and Linux, so first of all you'll need Windows. Second of all you will need OpenGL 2 compatible drivers for your graphics hardware. Performance may vary across both hardware and drivers.

Eep! I'm running into issues

If you do run into issues with fullscreen video, do go to http://bugzilla.mozilla.org/ and check if your issue has already been reported. If it hasn't, we're very interested in hearing from you so we can address it as quickly as possible. Don't forget to note your graphics hardware and driver version, as this is invaluable information when trying to diagnose issues.

If you're interested in hearing more about layers, Robert O'Callahan has a very informative blogpost here.

So, the moment is finally there! I realize I've not posted on here for a while, my main reason being I wanted to be able to actually have something to tell you guys the next time I would talk about Direct2D. And it seems that now I do! After a lot of hard work from myself and many others at Mozilla who have been assisting me in getting Direct2D and particularly DirectWrite stable and functional enough for people to start trying it out, I believe our Direct2D support is now ready for the world to try their hands at!

If you download the latest nightly here you will be the proud owner of an official experimental build that has support for Direct2D. This build will automatically update to our new experimental nightly builds, giving you all the bugfixes we're working on for various problems that are still around.

Does that mean if I download the latest nightly I get Direct2D support?

Well, no. Since we don't want to regress any functionality in our browser for the majority of our users, by default your build will not use DirectWrite. There's a couple of steps you'll need to execute to get it working:

- Go to about:config

- Click through the warning, if necessary

- Enter 'render' in the 'Filter' box

- Double-click on 'gfx.font_rendering.directwrite.enabled' to set it to true

- Double click on 'mozilla.widget.render-mode', set the value to 6

- Restart

So once I've done this, surely I have this Direct2D support going!

Erm... sorry folks, but no. There are several reasons why you might still not have Direct2D support. First of all, it's possible you have an extension interfering with Direct2D. Several extensions are known to disrupt our initialization order, meaning the preferences you've just set are not accessible during initialization of the render mode, which causes a fallback to the default GDI render mode.

Then there is another issue: if you're actually on Windows XP and not on Windows Vista or Windows 7, you're out of luck. Windows XP has no support for Direct2D or DirectWrite, so the build will fall back to behaving like a normal build. I suggest you upgrade :D.

Finally, if you do not have a high-end DirectX 9 graphics card or a DirectX 10 graphics card, insufficient hardware support will be detected. This will cause the renderer to fall back to GDI as well, in order to prevent you from suffering a slow browser or, even worse, a crash!
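The three fallback reasons above can be summed up as one decision. The following is a minimal sketch of that logic only. The `Environment` struct, its fields and `choose_render_mode` are hypothetical names invented for illustration and do not correspond to actual Mozilla code; the only value taken from this post is the render-mode setting of 6.

```cpp
#include <cassert>

enum class RenderMode { GDI, Direct2D };
enum class WindowsVersion { XP, Vista, Seven };

// Hypothetical snapshot of what is known when the render mode is chosen.
struct Environment {
    bool prefs_available_at_init;  // false if an extension disrupted init order
    int requested_render_mode;     // value of 'mozilla.widget.render-mode'
    WindowsVersion os;
    int directx_level;             // e.g. 9 or 10
    bool high_end_dx9;             // only meaningful when directx_level == 9
};

// The render-mode value this post asks you to set for Direct2D.
constexpr int kDirect2DRenderMode = 6;

RenderMode choose_render_mode(const Environment& env) {
    assert(env.directx_level >= 0);
    // Preferences unreadable during initialization: default to GDI.
    if (!env.prefs_available_at_init) return RenderMode::GDI;
    if (env.requested_render_mode != kDirect2DRenderMode) return RenderMode::GDI;
    // Windows XP has no Direct2D or DirectWrite.
    if (env.os == WindowsVersion::XP) return RenderMode::GDI;
    // Needs a DirectX 10 card, or a high-end DirectX 9 card.
    const bool gpu_ok = env.directx_level >= 10 ||
                        (env.directx_level == 9 && env.high_end_dx9);
    return gpu_ok ? RenderMode::Direct2D : RenderMode::GDI;
}
```

Every path that is not clearly supported ends in GDI, which matches the intent described above: a slower but working browser is preferable to a crash.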

Now, all this reading, by now I must have Direct2D support?!

Right, if you satisfy all the above conditions, you're probably there! We don't have any way to verify at the moment (we're working on something), but one way is to go here. If it runs nice and smooth when you size photos up to fullscreen, it's working!

It is working! Now what?

You should enjoy your new browsing experience! In most cases you should have a noticeably faster and more responsive browser, particularly when using graphically intensive websites (not using Flash, which will still be slow). If you find any bugs, please use our traditional reporting method of going to bugzilla and seeing if they've already been filed. If they haven't, file them, and we'll try to get to them and fix them as soon as possible!

There's a very cool forum thread that tracks the currently known issues, you should have a look there as well.

Well, that's about it!

I apologize to everyone for taking so long to get this ready for putting it out there, and for not providing experimental builds over the last months. The fact is it's surprising how much you can do on the web that at least I didn't know about! And in how many interesting ways it can break ;). But I hope you can now all enjoy the latest and greatest of Direct2D builds, and no longer suffer any manual updating of your build! Happy Browsing!